I have yet to see an enterprise AI program fail because of a weak model. Most of them struggle, or quietly stall, because data never reaches the model in a form that survives real operating conditions.

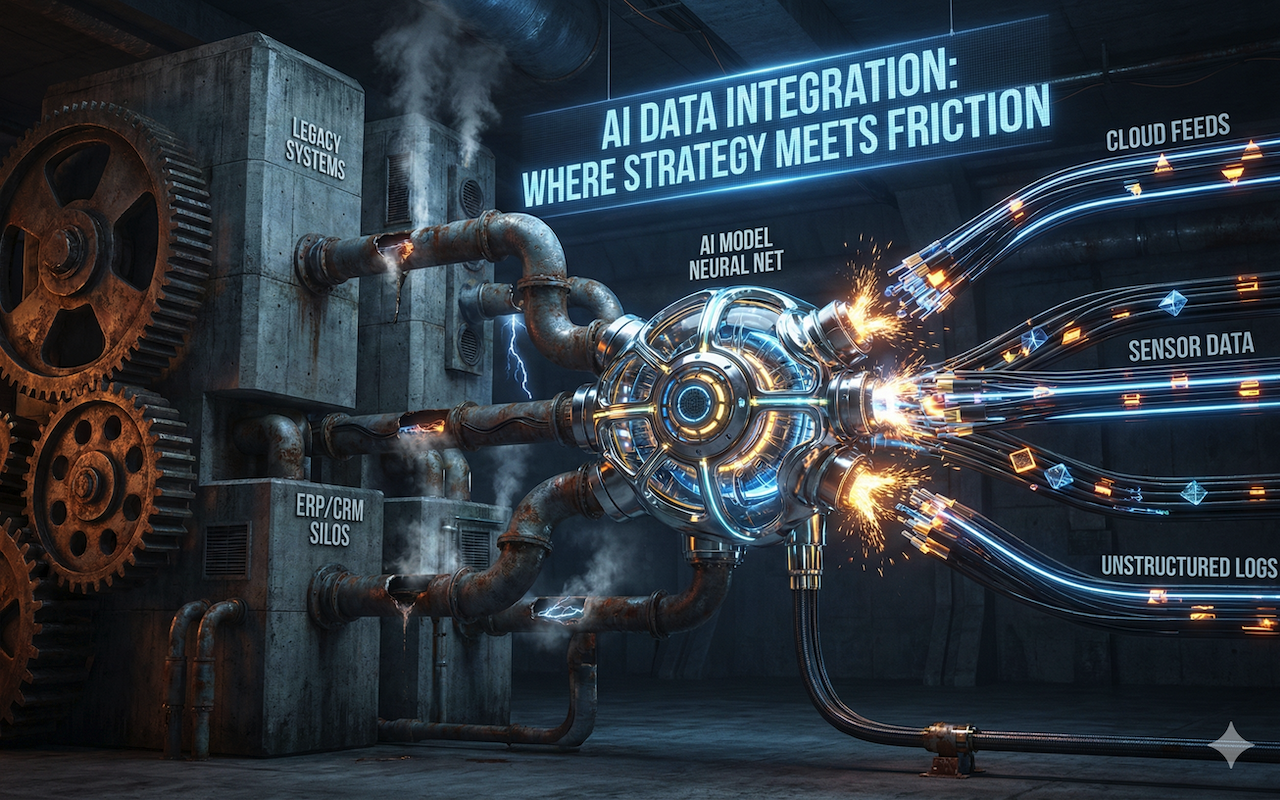

AI data integration sounds straightforward on paper: connect systems, move data, feed intelligence. In practice, it is where strategy meets friction—legacy platforms that were never designed to share data, cloud architectures evolving faster than governance models, and AI teams asking for data that technically exists but operationally can’t be trusted.

By the time organizations reach this stage, the question is no longer whether AI data integration matters. It’s whether they can make it stable enough to support decisions that actually carry risk.

How AI Changed the Meaning of “Data Integration”

Traditional data integration was built around predictability. You knew the source systems, the schemas changed slowly, and downstream consumers were humans reading reports. If data arrived a few hours late, nobody panicked.

AI changes the contract entirely.

Models consume data continuously. They combine transactional records with logs, text, sensor data, and signals that were never meant to coexist. Small inconsistencies propagate quickly. A delayed feed doesn’t just delay insight; it degrades output quality in ways that are hard to diagnose.

This is where many enterprises misjudge the problem. They treat AI data integration as a scaled-up version of ETL. It isn’t. It’s closer to running an always-on nervous system, where latency, drift, and context loss all have visible consequences.
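
One narrow, concrete form of the drift described above is a feed whose shape changes without notice. The sketch below is illustrative only: the `EXPECTED_SCHEMA` contract and the `schema_drift` helper are hypothetical names, not part of any particular tool, and a real pipeline would likely lean on a schema registry or a validation library instead.

```python
# Hypothetical contract for one incoming feed (assumption for illustration).
EXPECTED_SCHEMA = {"order_id": str, "amount": float, "created_at": str}


def schema_drift(record, expected=EXPECTED_SCHEMA):
    """Return a list of human-readable differences between a record and the contract."""
    problems = []
    # Fields the contract promises: check presence, then type.
    for name, expected_type in expected.items():
        if name not in record:
            problems.append(f"missing field: {name}")
        elif not isinstance(record[name], expected_type):
            actual = type(record[name]).__name__
            problems.append(
                f"type changed: {name} is {actual}, expected {expected_type.__name__}"
            )
    # Fields the contract does not know about.
    for name in record:
        if name not in expected:
            problems.append(f"unexpected field: {name}")
    return problems


if __name__ == "__main__":
    for problem in schema_drift({"order_id": "A1", "amount": "9.5", "channel": "web"}):
        print(problem)
```

A check like this does not fix drift. It only makes it visible at the point of ingestion, before a model quietly consumes a field whose meaning has changed.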

The First Constraint Is Almost Always Legacy Systems

Every serious AI data integration effort runs into the same wall early on: systems that still matter but were never built to participate in modern data flows.

Mainframes, proprietary ERPs, heavily customized CRM platforms—these systems hold authoritative data, yet exposing that data safely and consistently is non-trivial. Interfaces are brittle. Change windows are limited. Documentation is outdated or incomplete.

You can wrap them in APIs, replicate data downstream, or event-enable them incrementally. Each option carries trade-offs. Replication improves accessibility but introduces freshness risk. Real-time access preserves accuracy but stresses systems that were never designed for it.
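
The replication trade-off can be made explicit in code. The following is a minimal sketch, assuming the replica records when it was last synchronized. The names `is_fresh` and `choose_read_path`, and the idea of a per-use-case staleness budget, are assumptions for illustration rather than a prescribed design.

```python
from datetime import datetime, timedelta, timezone


def is_fresh(last_replicated_at, max_staleness, now=None):
    """True when the replicated copy is within the staleness budget."""
    now = now or datetime.now(timezone.utc)
    return (now - last_replicated_at) <= max_staleness


def choose_read_path(last_replicated_at, max_staleness, now=None):
    """Prefer the replica; fall back to the legacy source only when the copy is stale."""
    if is_fresh(last_replicated_at, max_staleness, now=now):
        return "replica"
    return "source"


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    print(choose_read_path(now - timedelta(minutes=5), timedelta(minutes=15), now=now))
    print(choose_read_path(now - timedelta(hours=2), timedelta(minutes=15), now=now))
```

The staleness budget is the real decision here. A tight budget sends more reads to a system that was never designed for them, while a loose one accepts more freshness risk.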

There is no perfect solution here. The only mistake is pretending the constraint doesn’t exist.

Where AI Helps and Where It Quietly Falls Short

AI-assisted data integration has improved meaningfully in recent years, particularly around schema inference, anomaly detection, and data quality monitoring. These capabilities reduce manual effort and surface issues faster than traditional rule-based approaches.

That said, AI does not eliminate judgment.

In real enterprise environments, false positives still occur. Automated mappings can look correct but encode subtle semantic errors. Anomalies flagged by intelligent systems still need context to interpret—is this a genuine data issue, or a legitimate business change?
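
One way to give a flagged anomaly the context it needs is to compare it against a record of known business changes before anyone treats it as a data defect. This is a sketch under stated assumptions: the `Anomaly` and `BusinessChange` types and the `triage` function are hypothetical, and the change log itself would have to be maintained by people who know the business.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Anomaly:
    field: str
    observed_on: date


@dataclass(frozen=True)
class BusinessChange:
    field: str
    effective_from: date
    note: str


def triage(anomaly, known_changes):
    """Attach context to an anomaly; both outcomes still go to a human reviewer."""
    for change in known_changes:
        same_field = change.field == anomaly.field
        after_change = anomaly.observed_on >= change.effective_from
        if same_field and after_change:
            return f"review: possible business change ({change.note})"
    return "review: possible data issue"


if __name__ == "__main__":
    changes = [BusinessChange("unit_price", date(2024, 3, 1), "new pricing tier")]
    print(triage(Anomaly("unit_price", date(2024, 3, 4)), changes))
    print(triage(Anomaly("unit_price", date(2024, 2, 20)), changes))
```

Neither branch closes the alert automatically. The code only narrows the question a reviewer has to answer.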

The teams that succeed treat AI as a force multiplier, not an autopilot. Integration logic still requires human accountability, especially when downstream AI systems influence financial, operational, or regulatory outcomes.

Integration Breaks When Architecture and Ownership Drift Apart

One of the less discussed failure modes in AI data integration is organizational rather than technical.

Data platforms are owned by one team. AI models by another. Security policies by a third. When integration spans all three without clear accountability, pipelines become fragile. Fixes are slow. Ownership is ambiguous. Everyone assumes someone else is monitoring quality.

The strongest integration programs align data flows with business domains rather than tools. When ownership is clear, for example when one team is accountable end to end for the pipeline supporting fraud detection or forecasting, decisions are made faster and trade-offs are explicit.

Without that alignment, even well-designed architectures decay under operational pressure.

Hybrid and Multi-Cloud: Necessary, Not Elegant

Most enterprises now operate across multiple clouds and on-prem environments, often by necessity rather than choice. AI data integration in this context is rarely clean.

Data gravity matters. Compliance constraints matter. So do cost controls that surface months after initial architecture decisions. Moving data freely is easy in theory and expensive in practice.

Successful teams design integration paths that minimize movement, accept some duplication, and prioritize consistent data over architectural purity. They choose where intelligence runs based on where data already lives, not where the tooling is most fashionable.

It’s a compromise, but one that survives real budgets and audits.

Governance Is the Difference Between Scale and Stall

AI systems magnify the consequences of poor governance. Once integrated pipelines feed multiple models, a single access control gap or lineage blind spot becomes a systemic risk.

The mistake many teams make is layering governance after integration stabilizes. By then, retrofitting controls is painful and politically charged.

The better approach embeds governance into pipelines from the start—data classification, access enforcement, traceability. Not because it’s elegant, but because it prevents the kind of late-stage surprises that freeze AI programs indefinitely.
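
As a rough illustration of what embedding governance into a pipeline can mean, the sketch below applies classification, access enforcement, and traceability in a single step. Everything named here is an assumption made for the example: the `CLASSIFICATION` and `CLEARANCE` tables, the consumer names, and the `release` function are hypothetical, and a production system would normally source them from a catalog and a policy engine.

```python
from dataclasses import dataclass, field

# Hypothetical column classifications and consumer clearances (assumptions).
CLASSIFICATION = {"email": "pii", "amount": "internal"}
CLEARANCE = {
    "fraud-model": {"pii", "internal"},
    "forecast-model": {"internal"},
}


@dataclass
class Lineage:
    """In-memory trace of every release decision: (consumer, column, allowed)."""
    events: list = field(default_factory=list)

    def record(self, consumer, column, allowed):
        self.events.append((consumer, column, allowed))


def release(record, consumer, lineage):
    """Return only the columns this consumer is cleared for, logging each decision."""
    allowed_labels = CLEARANCE.get(consumer, set())
    released = {}
    for column, value in record.items():
        # Columns with no classification are denied by default.
        label = CLASSIFICATION.get(column, "unclassified")
        allowed = label in allowed_labels
        lineage.record(consumer, column, allowed)
        if allowed:
            released[column] = value
    return released


if __name__ == "__main__":
    lineage = Lineage()
    row = {"email": "a@example.com", "amount": 10, "notes": "free text"}
    print(release(row, "forecast-model", lineage))
    print(lineage.events)
```

The design choice worth noting is the default. An unclassified column is withheld rather than passed through, so a new field cannot reach a model until someone has decided what it is.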

Governance slows nothing if it’s designed properly. It only slows teams when it’s treated as an afterthought.

When AI Data Integration Does Not Fully Deliver

It’s worth saying plainly: not every integration effort justifies its cost.

Some AI use cases remain too sensitive to data noise. Others require context that integration alone cannot provide. In certain environments, the operational overhead outweighs the benefits, and simpler analytical approaches remain more reliable.

Recognizing these limits is not failure. It’s discipline.

The goal is not universal integration. It’s targeted, trustworthy data movement aligned to decisions that matter.

What Maturity Actually Looks Like

Mature AI data integration is rarely flashy. Pipelines are boring. Failures are visible early. Data consumers trust what they receive, even when it’s incomplete.

Teams spend less time fixing breakages and more time refining how data supports decision-making. Integration becomes a capability, not a project.

Organizations that reach this stage tend to move faster not because they automate more, but because they argue less about whose data is correct.

Conclusion

AI data integration is not about elegance or speed alone. It’s about durability. The systems that last are those designed with friction in mind—technical, organizational, and regulatory.

Enterprise teams that approach integration with humility, clear ownership, and respect for legacy realities build foundations that AI can actually stand on. Those who chase perfection usually end up rebuilding.

At this stage, organizations often seek external support as experimentation gives way to production pressure and integration must operate reliably under real-world constraints. This transition marks the point at which AI data integration moves from theoretical design to operational execution.

Featured Image generated by Google Gemini.
