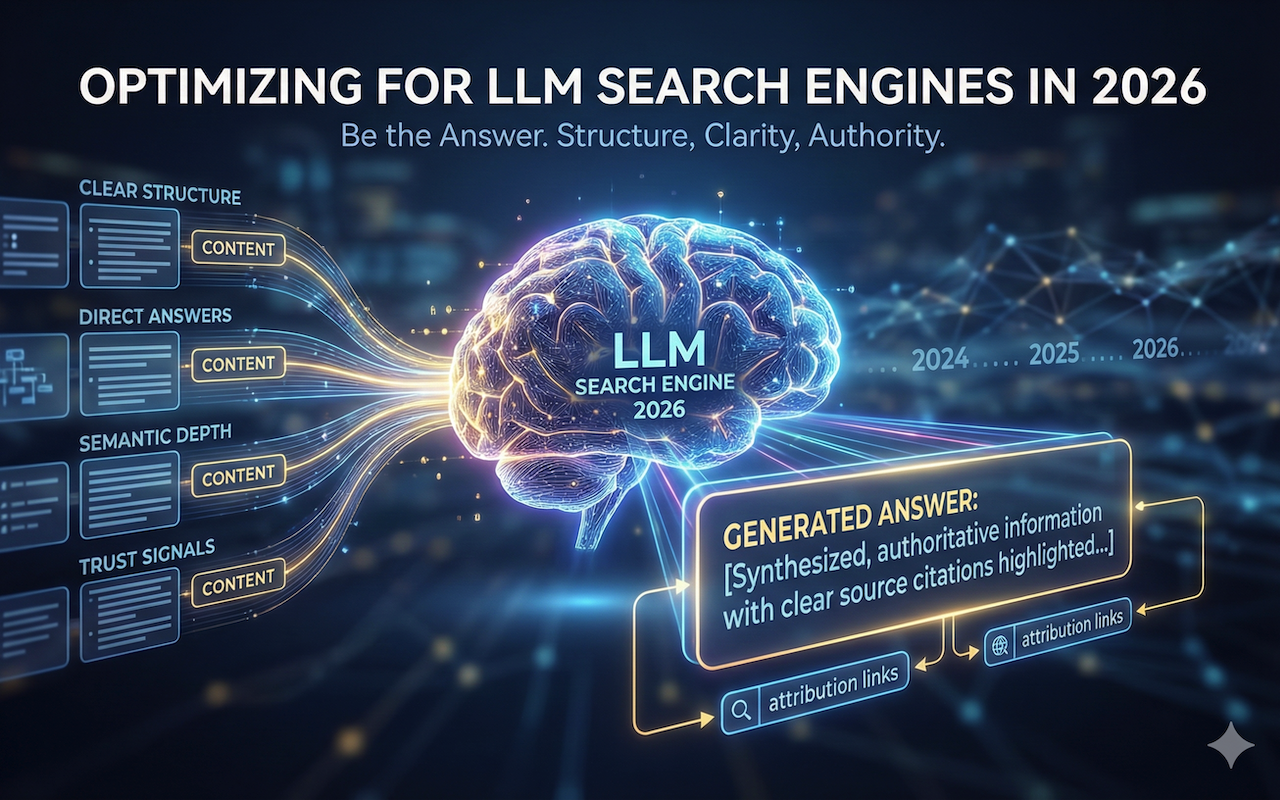

Search is no longer limited to ten blue links. Search engines powered by large language models (LLMs) are transforming how users discover information online. Instead of browsing pages, users now ask direct questions and receive fully formed answers. By 2026, visibility within these generated responses will be just as important as traditional rankings. Optimizing for LLM search engines requires a strategic shift in how content is written, structured, and validated.

This guide explains what LLM search engines are, why they matter in 2026, and how to write, structure, support, and measure content for them.

What Are LLM Search Engines?

LLM search engines use advanced language models to understand queries and generate human-like responses. Rather than directing users to multiple pages, they synthesize information from trusted sources and present a single, coherent answer.

Key Characteristics of LLM Search

- They prioritize direct answers over lists of links

- They interpret conversational and long-form queries

- They select and reference authoritative sources

- They focus on user intent rather than keyword matching

Because of this behavior, content must be created in a way that allows these systems to easily extract accurate and reliable information.

Why Optimizing for LLM Search Is Critical in 2026

Changing User Behavior

Users are increasingly turning to conversational search tools to save time. Instead of navigating multiple pages, they expect immediate clarity. The result is fewer clicks, which makes being referenced as a source more important.

Reduced Dependence on Traditional Rankings

Ranking first on a search results page does not guarantee visibility within an LLM-generated response. Content is selected based on clarity, trust, and usefulness rather than position alone.

Authority Is the New Visibility

When an LLM cites your content, your brand becomes part of the answer. This builds long-term authority even if the user does not immediately visit your website.

Core Principles of LLM Search Optimization

Write for Questions, Not Keywords

LLM platforms respond best to content that mirrors how people naturally ask questions.

Focus areas include:

- Full-sentence queries

- Natural language phrasing

- Clear problem statements

- Direct, explanatory answers

Content should clearly address what, why, how, and when questions within the body text.

Provide Clear and Direct Answers Early

LLM systems scan content to identify the most concise and relevant explanations.

Best practices include:

- Answering the main question within the first few paragraphs

- Avoiding unnecessary storytelling before the explanation

- Writing definitions in simple, factual language

This increases the likelihood of your content being selected as a source.

Structuring Content for LLM Understanding

Use a Strong Content Hierarchy

Clear structure helps both readers and language models understand your content.

Essential structural elements include:

- One clear H1 that defines the topic

- H2 sections that represent major concepts

- H3 sections that break ideas into clear, explainable units

- A logical flow from basic to advanced concepts

Well-structured content is easier to summarize and reference.
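
The hierarchy rules above can be checked automatically. The following is a minimal sketch in Python, assuming the page is available as an HTML string. The function name `audit_headings` and the two sample pages are hypothetical, and the "no skipped levels" rule is one reasonable reading of "a logical flow", not a documented requirement of any search platform.

```python
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Collects the levels of h1-h6 tags in document order."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag names, so "H2" arrives as "h2".
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def audit_headings(html):
    """Return a list of human-readable problems found in the heading outline."""
    collector = HeadingCollector()
    collector.feed(html)
    levels = collector.levels
    problems = []

    h1_count = levels.count(1)
    if h1_count != 1:
        problems.append(f"expected exactly one h1, found {h1_count}")

    # A heading may go deeper by at most one level at a time (h2 -> h3, not h2 -> h4).
    for previous, current in zip(levels, levels[1:]):
        if current > previous + 1:
            problems.append(f"h{previous} is followed by h{current}, skipping a level")

    return problems


# Hypothetical sample pages for illustration.
good_page = "<h1>LLM search</h1><h2>Structure</h2><h3>Lists</h3><h2>Trust</h2>"
bad_page = "<h1>LLM search</h1><h1>Again</h1><h2>Structure</h2><h4>Lists</h4>"

print(audit_headings(good_page))
print(audit_headings(bad_page))
```

The first page produces an empty list. The second reports two problems: a duplicate H1 and a jump from H2 to H4.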

Use Points and Lists for Concept Clarity

LLMs extract information more effectively from clearly separated points.

Use numbered or bulleted lists to:

- Explain processes

- Define benefits

- Outline steps

- Compare concepts

Each point should focus on a single idea and avoid mixing topics.

Content Depth and Semantic Coverage

Cover Topics Completely, Not Superficially

LLM search engines favor content that demonstrates a full understanding of a subject.

This includes:

- Clear definitions and context

- Practical implications

- Related subtopics

- Common misconceptions

Shallow content that simply repeats existing summaries is less likely to be referenced.

Use Related Concepts Naturally

Instead of repeating the same terms, include related phrases and supporting ideas, such as:

- Search intent

- Content credibility

- Source reliability

- Information accuracy

This semantic depth signals expertise.

Authority and Trust Signals

Demonstrate Real Expertise

LLMs prioritize content that reflects real-world experience and subject-matter knowledge.

Ways to demonstrate expertise include:

- Case-based explanations

- Industry-specific examples

- Data-driven insights

- A clear, professional tone

Avoid exaggerated claims or overly generic advice.

Strengthen Brand Presence Across the Web

LLM systems learn from multiple sources. A strong brand footprint improves credibility.

Important signals include:

- Mentions on reputable websites

- Citations in industry-related content

- Consistent brand messaging

- Clear author attribution

The more consistently your brand appears in trusted contexts, the more likely it is to be referenced.

Technical Foundations That Support LLM Visibility

Ensure Content Accessibility

Your content must be easily accessible to be included as a source.

Key technical requirements include:

- Fast-loading pages

- Mobile-friendly layouts

- Clean HTML structure

- Proper indexing

Strong technical foundations support content discovery.
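
One part of "proper indexing" that is easy to verify is whether your robots.txt blocks a given crawler. Below is a small sketch using Python's standard `urllib.robotparser`. The robots.txt content, the URLs, and the user-agent names `ExampleAIBot` and `OtherBot` are all hypothetical; substitute the user-agent strings each platform documents for its own crawler.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: one AI crawler is blocked entirely,
# every other crawler is only kept out of /drafts/.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Disallow: /drafts/
"""


def allowed_agents(robots_txt, url, user_agents):
    """Map each user agent to True/False: may it fetch the given URL?"""
    parser = RobotFileParser()
    # parse() accepts the file as a list of lines, so no network call is needed.
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in user_agents}


page = "https://example.com/guides/llm-search"
print(allowed_agents(ROBOTS_TXT, page, ["ExampleAIBot", "OtherBot"]))
```

Here the guide page is reachable for `OtherBot` but not for `ExampleAIBot`, which means a platform using that crawler could not read the page from your site. This only checks robots.txt rules; page speed, mobile layout, and HTML quality need separate tools.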

Use Structured Data Where Relevant

Structured data helps systems understand the purpose of your content.

Useful applications include:

- Article schema

- FAQ sections

- How-to content

- Author information

This added context improves extraction accuracy.
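
As an illustration, Article and FAQ markup is commonly expressed as JSON-LD using the schema.org vocabulary. The sketch below builds both objects in Python and wraps them in a script tag. The helper names are hypothetical, and the headline, author, and date are placeholders rather than details of any real page.

```python
import json


def article_jsonld(headline, author_name, date_published):
    """Build a schema.org Article object as a plain dict."""
    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,
    }


def faq_jsonld(question_answer_pairs):
    """Build a schema.org FAQPage object from (question, answer) tuples."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in question_answer_pairs
        ],
    }


def as_script_tag(data):
    """Wrap a JSON-LD dict in the script tag that is embedded in the page."""
    # Escape "</" so text containing "</script>" cannot close the tag early;
    # "\/" is a valid JSON escape for "/".
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


# Placeholder values for illustration only.
article = article_jsonld("Example headline", "Example Author", "2026-01-15")
faq = faq_jsonld([
    ("What are LLM search engines?",
     "Search engines that use language models to generate direct answers."),
])

print(as_script_tag(article))
print(as_script_tag(faq))
```

Keep the marked-up questions and answers identical to the text visible on the page, so that the structured data describes the content rather than replacing it.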

Platform-Specific Optimization Strategies

Optimizing for Conversational Engines

Conversational platforms prefer content that feels natural and informative.

Best practices include:

- Clear explanations without unnecessary jargon

- Question-and-answer formatting

- Concise paragraph lengths

- Logical progression of ideas

This aligns closely with how responses are generated.

Optimizing for Citation-Based Engines

Some platforms prioritize transparency and source credibility.

To improve visibility:

- Reference reliable data

- Avoid unsupported opinions

- Use factual, neutral language

- Maintain accuracy throughout

Fact-focused content is more likely to be cited.

Measuring Success in LLM Search Optimization

Track Mentions, Not Just Traffic

Success metrics are evolving.

Key indicators now include:

- Brand mentions in generated answers

- Content citations

- Visibility across multiple platforms

- Authority growth over time

Traffic still matters, but visibility within answers is equally valuable.
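
These indicators can be tallied with a simple script once you have collected generated answers, for example by saving responses to a fixed set of test questions. The sketch below assumes the answers are already available as (platform, text) pairs; how you collect them is outside its scope. The brand `Acme Analytics`, the domain `acme.example`, the platform labels, and the sample answers are all invented for illustration.

```python
import re


def summarize_visibility(answers, brand, domain):
    """Count, per platform, answers that name the brand or cite the domain."""
    # Word boundaries stop the brand from matching inside longer words.
    brand_pattern = re.compile(rf"\b{re.escape(brand)}\b", re.IGNORECASE)
    summary = {}
    for platform, text in answers:
        stats = summary.setdefault(
            platform, {"answers": 0, "brand_mentions": 0, "citations": 0}
        )
        stats["answers"] += 1
        if brand_pattern.search(text):
            stats["brand_mentions"] += 1
        if domain.lower() in text.lower():
            stats["citations"] += 1
    return summary


# Invented sample data: (platform label, generated answer text).
collected = [
    ("engine_a", "Acme Analytics recommends answering the main question early "
                 "(source: acme.example/guide)."),
    ("engine_a", "Structured data helps systems understand content."),
    ("engine_b", "According to acme analytics, clear headings matter."),
]

for platform, stats in summarize_visibility(collected, "Acme Analytics", "acme.example").items():
    print(platform, stats)
```

Running the same question set on a regular schedule and comparing the counts over time gives a rough view of authority growth alongside traffic figures.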

Combine Traditional SEO With LLM Optimization

LLM optimization does not replace traditional SEO; it enhances it.

A balanced strategy includes:

- Technical optimization

- High-quality content

- Clear structure

- Authority building

Together, these ensure long-term discoverability.

Conclusion

Optimizing for LLM search engines in 2026 is about clarity, credibility, and completeness. Content must be structured, informative, and trustworthy to be selected as a source in generated answers. By focusing on user intent, semantic depth, strong formatting, and authoritative signals, brands can position themselves as reliable voices in an increasingly answer-driven search landscape.

Those who adapt early will not only remain visible but will become the answers users trust.

Featured Image generated by Google Gemini.
